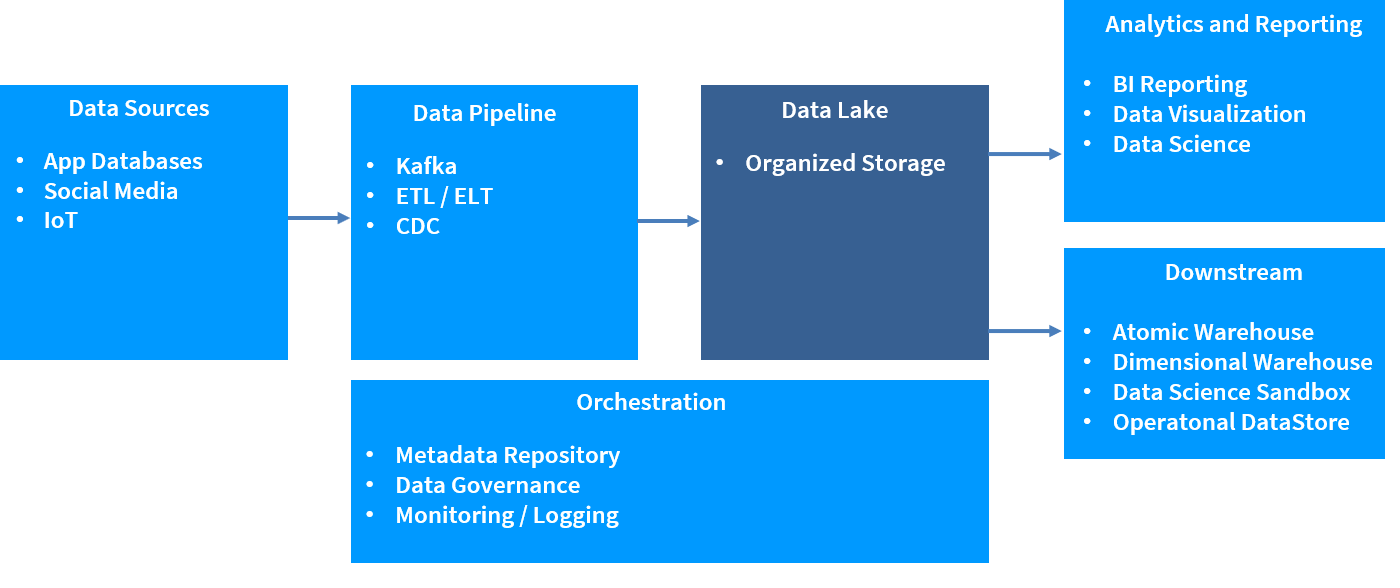

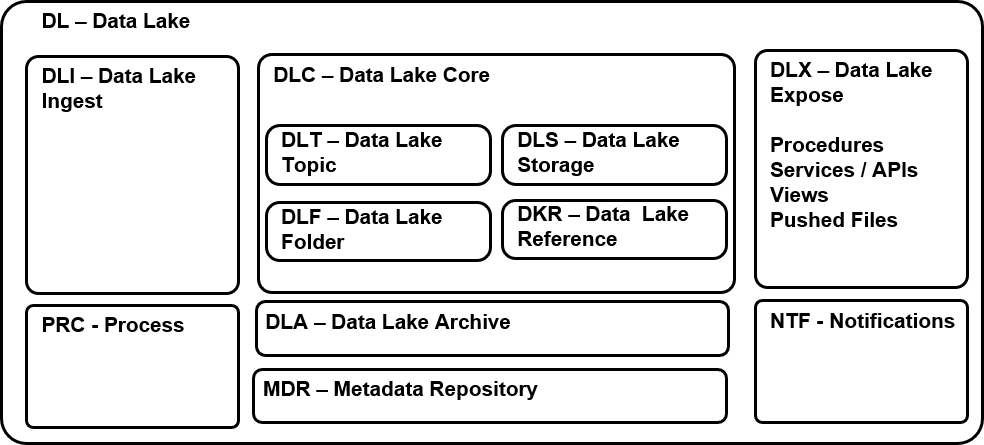

The Data Lake is the centralized, organized and secure repository where "Big Data" is ingested, stored and analyzed, and where the many Vs of Big Data are supported. Data is stored in a large-scale file system such as HDFS or in blob storage. Volume: huge amounts of data, into the petabytes and beyond, can be stored. Variety: structured, semi-structured and unstructured data formats are all supported. Velocity: data arriving at a rapid pace is absorbed by the Data Lake. Veracity: data issues may need to be removed to provide a high degree of data veracity. Value: maximizing the value returned by the Data Lake is the bottom line.

Leading data storage platforms for Data Lake include:

The two largest users of the Data Lake are data scientists and downstream systems. Data scientists access the Data Lake as part of analytics and may extract data for use in Data Science Sandboxes. Downstream systems such as the Atomic Warehouse receive data from the Data Lake for specific purposes. Organizations that implemented Data Lakes outperformed similar companies by nearly 9% according to an Aberdeen survey.

These benefits and capabilities may be realized through the use of the Data Lake:

These are the top Data Lake terms that you need to know:

| Term | Definition |
|---|---|
| Apache Avro | An open source, row-oriented, highly compressed data format used in HDFS. It includes a schema definition. |
| Apache HAWQ | Hadoop-native SQL query engine which provides high-performance queries on data stored in HDFS. HAWQ is relatively new (incubator status) and has better performance than Hive. |
| Apache HDFS | Hadoop Distributed File System. |
| Apache Hive | An early Hadoop-native SQL-like query engine. Hive is batch oriented. |
| Apache Impala | Hadoop-native SQL query engine for analytics which provides improved performance over Hive. It was released in 2013. |
| Apache Kafka | Leading stream-processing software platform. It is used to transport data to the Data Lake for ingestion. Donated by LinkedIn to Apache. Commercially extended by Confluent. |
| Apache ORC | An open source, column-oriented HDFS data format, similar to Parquet. |
| Apache Parquet | An open source, column-oriented HDFS data format, similar to ORC. |
| Data Lake | A centralized, organized and secure repository where large volumes of data are ingested, stored and analyzed. |
| Data Swamp | A data lake gone wrong. |
| MPP DBMS | A Database Management System with Massively Parallel Processing. MPP enables high-performance, scalable processing by spreading work across multiple processing nodes. |
| SQL | Structured Query Language is an ANSI standard computer language commonly used to access data stored in databases. |
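
The difference between the row-oriented (Avro) and column-oriented (ORC, Parquet) formats in the table can be illustrated with a small sketch. This is plain Python and not the actual on-disk encoding of any of those formats; the sample records and the `to_columns` helper are hypothetical and exist only to show why an analytic query that reads one field tends to favor a columnar layout.

```python
from typing import Any

# Hypothetical sample records (not from the article), held row by row,
# the way a row-oriented format keeps each record's fields together.
records: list[dict[str, Any]] = [
    {"id": 1, "region": "east", "amount": 10.0},
    {"id": 2, "region": "west", "amount": 25.5},
    {"id": 3, "region": "east", "amount": 7.25},
]


def to_columns(rows: list[dict[str, Any]]) -> dict[str, list[Any]]:
    """Pivot row-shaped records into one list of values per field."""
    columns: dict[str, list[Any]] = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(records)

# Row layout: every whole record is visited to total a single field.
row_total = sum(row["amount"] for row in records)

# Column layout: only the "amount" values are visited.
column_total = sum(columns["amount"])
```

Both totals are the same; the layouts differ only in how much unrelated data must be read to compute them. Row layouts suit writing and reading whole records, while column layouts suit scans over a few fields.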

The Data Lake datastore contains massive volumes of data stored in raw format, matching its data sources. The Data Lake typically stores data in blobs or flat files organized into folders. Storage of unstructured data such as images and documents is a common use case for the Data Lake. The Data Lake may use a file system such as the Hadoop Distributed File System (HDFS), which stores multiple copies of data to enable rapid, parallel retrieval.
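
As a minimal sketch of "flat files organized into folders", the function below copies a source file, byte for byte, into a folder path built from the source system name and the load date. It uses a local file system as a stand-in for HDFS or blob storage. The `land_raw_file` name, the `raw` top-level folder and the `source/year/month/day` layout are assumptions made for illustration, not a convention stated in this article.

```python
import shutil
from datetime import date
from pathlib import Path


def land_raw_file(lake_root: Path, source: str, src_file: Path, load_date: date) -> Path:
    """Copy src_file unchanged into <lake_root>/raw/<source>/<yyyy>/<mm>/<dd>/."""
    # Hypothetical folder convention: one folder per source system and load date.
    target_dir = (
        lake_root
        / "raw"
        / source
        / f"{load_date:%Y}"
        / f"{load_date:%m}"
        / f"{load_date:%d}"
    )
    target_dir.mkdir(parents=True, exist_ok=True)

    # The file is stored as-is, with no parsing or conversion, so the lake
    # copy matches the data source exactly.
    target = target_dir / src_file.name
    shutil.copyfile(src_file, target)
    return target
```

For example, `land_raw_file(Path("/data/lake"), "crm", Path("customers.csv"), date(2020, 1, 15))` would place the file at `/data/lake/raw/crm/2020/01/15/customers.csv`. Keeping the raw copy untouched leaves any cleanup or reshaping to later steps.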

The Data Lake tends to be composed of the following zones or similar zones with different names:

These articles provide insight into the Data Lake:

Copyright 2006-2020 by Infogoal, LLC. All Rights Reserved.